In this post I’ll cover why I set up a local Jenkins server, and what benefits you might gain by doing the same. This post is probably best suited to developers, but it may be worth a try if you have many repetitive tasks.

What it looks like in action

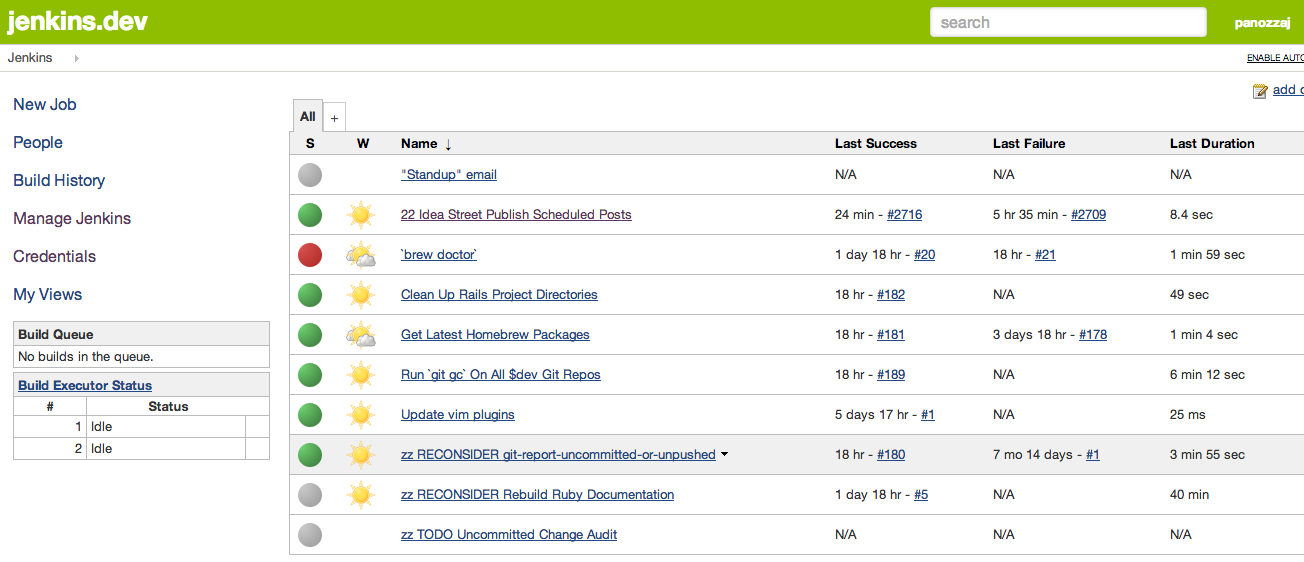

Here is what my current Jenkins dashboard looks like:

You may notice that it looks more aesthetically pleasing than other Jenkins installs. This is due to the Doony plugin, a series of UI improvements that make Jenkins much nicer to use. My favorite is a “Build Now” button that kicks off a build and takes you to the console page to watch it (typically a three- or four-step process otherwise). This is handy for testing new or newly modified jobs. The console output itself looks much better as well. Doony even has a Chrome extension, so if you work in a stuffy office you can still get a sexier Jenkins just for yourself.

What I specifically use it for

There are many things that need to be done to keep a computer system running at its best, and I try to automate as many of them as possible. With a local Jenkins server, I can do this and easily keep tabs on how everything is running.

One easy application is to keep the system updated. On Linux, you’d run `sudo apt-get update && sudo apt-get upgrade -y`. On Mac, running `brew update && brew upgrade` nightly keeps me on the latest and greatest. Anything that needs older versions of software (databases, etc.) should have its own virtual environment or be running on the latest anyway.
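As a rough sketch, a single Jenkins “Execute shell” step could pick the update command based on the machine it runs on. The function name `update_command` is hypothetical, and the Linux branch assumes a Debian-style system where the Jenkins user is allowed to run `sudo` without a password. The function only prints the command, so it can be reviewed before it is wired into a job.

```shell
#!/bin/sh
# Hypothetical helper: print the update command for a kernel name
# as reported by `uname -s`. Fails for systems it does not know about.
update_command() {
  case "$1" in
    Linux)  echo "sudo apt-get update && sudo apt-get upgrade -y" ;;
    Darwin) echo "brew update && brew upgrade" ;;
    *)      echo "unsupported system: $1" >&2; return 1 ;;
  esac
}

# In a Jenkins shell step this could be used as:
#   eval "$(update_command "$(uname -s)")"
```

Because the shell step exits with the status of the last command, a failed update shows up as a failed build.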

On a similar note, I pull the latest plugins for Vim. I have over forty git submodules in my dotfiles, most of which are Vim plugins. Updating them all in one job keeps me on the bleeding edge so I pick up bug fixes. It also ensures that cloning my dotfiles onto another computer will work as expected.

I blog with Jekyll and publish scheduled posts with an hourly script that checks for posts that are due and publishes them. This is pretty consistent: posts go out within an hour of their scheduled time, assuming my laptop has an internet connection. If it doesn’t, it’s probably not a big deal, since they will just be published the next time around. Running the job locally is also handy because it uses the AWS credentials stored on my computer.
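The “which posts are due” check could look something like the sketch below. It is not my actual script: `due_posts` is a hypothetical name, and it assumes posts follow the usual Jekyll file naming of `YYYY-MM-DD-title.md`. It lists the posts whose date is on or before a given day, and leaves the publishing step to the job.

```shell
#!/bin/sh
# Hypothetical helper: list posts in directory $1 whose filename date
# (YYYY-MM-DD-title.md) is on or before $2 (defaults to today).
due_posts() {
  dir=$1
  today=${2:-$(date +%Y-%m-%d)}
  for post in "$dir"/*.md; do
    [ -e "$post" ] || continue            # directory had no .md files
    name=${post##*/}                       # strip the directory part
    case "$name" in
      [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]-*) ;;
      *) continue ;;                       # skip files without a date prefix
    esac
    post_date=$(printf '%s' "$name" | cut -c1-10)
    # Compare the dates as plain integers, e.g. 20240601.
    if [ "$(printf '%s' "$post_date" | tr -d '-')" -le "$(printf '%s' "$today" | tr -d '-')" ]; then
      echo "$post"
    fi
  done
}
```

An hourly job could then rebuild and upload the site only when the output is non-empty.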

To keep things running fast, I have one job that runs git garbage collection on all of my repos nightly as well. (See this StackOverflow post for potential tradeoffs of doing this. I haven’t seen any negative effects so far.) I also have one job that goes through Rails projects that I have and clears out their logs and upload directories. This keeps searches faster and more relevant by trimming log files and attachments, many of which are generated when doing automated test runs.
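Both cleanup jobs are small enough to sketch. The function names and the directory layout here are assumptions for illustration: `gc_all_repos` expects a folder that contains git checkouts, and `clear_rails_logs` only truncates `log/*.log` in a single project, leaving upload directories alone.

```shell
#!/bin/sh
# Hypothetical helper: run `git gc` in every repository found under $1.
# Returns non-zero if any repository fails, so the build is marked as failed.
gc_all_repos() {
  # Each .git directory marks the top of a repository; -prune avoids descending into it.
  find "$1" -type d -name .git -prune | (
    failed=0
    while IFS= read -r gitdir; do
      repo=${gitdir%/.git}
      echo "Collecting garbage in $repo"
      git -C "$repo" gc --quiet || failed=1
    done
    exit "$failed"                         # exit status of the pipeline
  )
}

# Hypothetical helper: empty every log file of the Rails project at $1,
# keeping the files themselves in place.
clear_rails_logs() {
  for log in "$1"/log/*.log; do
    [ -f "$log" ] || continue
    : > "$log"
  done
}
```

Truncating rather than deleting the logs means a running server can keep writing to the same file.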

Last, I run diagnostics. `brew doctor` ensures that I don’t have any dangling Homebrew installs or ill-fated link attempts. As is standard, the command returns a zero exit code if it succeeds and a non-zero code if it reports anything, which makes it easy to script with Jenkins. I typically just fix the issue in the morning and kick off another build.
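Since a Jenkins shell step fails the build when the script exits with a non-zero status, a diagnostic job needs very little code. Here is a minimal sketch with a hypothetical wrapper, `run_diagnostic`, that adds a readable line to the console output and passes the exit code through unchanged.

```shell
#!/bin/sh
# Hypothetical wrapper: run a diagnostic command and pass its exit code through.
run_diagnostic() {
  if "$@"; then
    echo "OK: $*"
  else
    status=$?
    echo "PROBLEM (exit $status): $*" >&2
    return "$status"
  fi
}

# Example for a Jenkins "Execute shell" step:
#   run_diagnostic brew doctor
```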

Why is it better?

The cron ecosystem is typically the standard way to schedule tasks. However, there are some shortcomings with using cron for this:

- hard to debug when things go wrong

- the job itself might fail silently

- lack of consistent logging or notifications

- typically need to log console results to a random file

- inspect that file randomly (remember where it is?)

- hard to link together multiple scripts

- tough to have RVM support

- need to set up paths correctly or else…

- likely little history of the job and its configuration

- emails might get sent out… or they might not

- by default emails are only sent locally
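To make the list above concrete, this is roughly the kind of wrapper each cron job would need just to get basic logging. It is only a sketch: `log_run` and the paths in the comments are hypothetical names, not something cron or Jenkins provides.

```shell
#!/bin/sh
# Hypothetical cron wrapper: run a command and append its output,
# a timestamp, and its exit status to a log file.
log_run() {
  logfile=$1
  shift
  {
    echo "== $(date '+%Y-%m-%d %H:%M:%S') START: $*"
    "$@"
    status=$?
    echo "== exit status: $status"
  } >> "$logfile" 2>&1
  return "$status"
}

# A cron job would call it from a script, for example (hypothetical paths):
#   log_run "$HOME/logs/nightly.log" "$HOME/bin/nightly-update.sh"
```

Even with this in place, you still have to remember where the log lives and go look at it.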

With Jenkins, you get the entire history of the job with full logs, and you can tack on notifications as needed. You can customize it with plugins for interacting with GitHub repos, seeing disk usage, or emailing specific users when builds fail or are unstable. There is a graphical interface for viewing and changing the jobs, and the job configuration can be versioned. Jenkins has some memory overhead, but not much, and I think the improved interface is worth it.

I think automating all of this probably saves me time in the long run. I would do it even without Jenkins; now I just have a better interface.

I take the pain early on broken Homebrew packages or Vim bundles. I can more quickly change these, and other developers that I work with can benefit from me seeing the problems first. Running on the bleeding edge provides early warning when a Vim git repository changes or gets deleted, as I typically find out the day after updating. I’d rather have a few small fixes over time than a bunch of fixes when I need to update a critical package.

Lastly, I get better at administering Jenkins, which carries over to other domains like continuous integration / continuous deployment. Building automation muscles.

Setting it up

I covered installing a local Jenkins server in a previous blog post. Check it out for some more thoughts and why Jenkins LTS is likely the way you want to go for local Jenkins installs.

I also change the port from 8080 to 9090, since I sometimes test other Jenkins installs (for pushing up to other servers with Vagrant). Since I am on a Mac, I also use Pow so that http://jenkins.dev points to localhost:9090:

    echo 'http://localhost:9090' > ~/.pow/jenkins

Some other automation ideas

I plan on outfitting Jenkins with a few more jobs, as time permits.

One job is a script that will go through my git repos and figure out which changes are not committed or pushed across them, along with checking stashes to see if there is code lingering in them. I would like to lessen the time it takes from value creation to value realization. Plus, having unpushed code is typically a liability in case something happens to my computer overnight. The job could email me a summary of this every day so I can take action on it in the morning.
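A first pass at this job might look like the sketch below. The function name `repo_status` is hypothetical, and “unpushed” is approximated as commits on local branches that are not reachable from any remote-tracking branch, so a repo with no remote at all counts every commit as unpushed.

```shell
#!/bin/sh
# Hypothetical helper: print one summary line for the repository at $1 if it
# has uncommitted changes, stashes, or commits not on any remote-tracking branch.
repo_status() {
  repo=$1
  # $(( ... )) strips the padding some `wc` implementations add.
  dirty=$(( $(git -C "$repo" status --porcelain | wc -l) ))
  stashes=$(( $(git -C "$repo" stash list | wc -l) ))
  unpushed=$(( $(git -C "$repo" log --branches --not --remotes --oneline | wc -l) ))
  if [ "$dirty" -gt 0 ] || [ "$stashes" -gt 0 ] || [ "$unpushed" -gt 0 ]; then
    echo "$repo: $dirty uncommitted, $unpushed unpushed, $stashes stashed"
  fi
}
```

Running it over each repo and letting Jenkins email the console output would give the morning summary.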

I would like a “standup” script that summarizes what I did the previous day across all projects. Here are some code sketches that are good inspiration:

I’d like to automatically calculate how many words were written across all git repos that are associated with writing: basically a plus/minus/net count of words written in Markdown files. I already have a semblance of a net word count script, so I would just need to wrap it with a list of repos and run it daily at midnight. The script would also encourage me to commit my writing more regularly, since I know it would affect the stats.
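The net part of that count can be approximated by comparing total Markdown word counts at two revisions. This is a sketch under assumptions, not my existing script: `words_at` and `net_words` are hypothetical names, only files ending in `.md` are counted, and file names with unusual characters are not handled.

```shell
#!/bin/sh
# Hypothetical helper: total word count of all .md files in repo $1 at revision $2.
words_at() {
  repo=$1
  rev=$2
  git -C "$repo" ls-tree -r --name-only "$rev" |
    sed -n '/\.md$/p' |                    # keep only Markdown files
    while IFS= read -r file; do
      git -C "$repo" show "$rev:$file"
    done |
    wc -w | awk '{ print $1 }'             # awk strips wc's padding
}

# Net words written in repo $1 between an old revision $2 and a new revision $3.
net_words() {
  echo $(( $(words_at "$1" "$3") - $(words_at "$1" "$2") ))
}
```

For a daily job, the old revision could be the last commit before midnight, for example the result of `git rev-list -1 --before=midnight HEAD`.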

It might be nice to rebuild Ruby documentation for all installed gems in case I am without an internet connection, although I am not sold on the value of this yet. It seems rare these days that I don’t have a connection and need to look things up.

You could use Jenkins to automate things like:

- checking websites for broken external links

- pulling down data from servers or APIs

- sending you email reminders

- mirroring websites

- checking disk space

- running cleanup or backup scripts

The nice thing is that it shouldn’t take long to do most of these, and you can see the history.
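As an example of how small these jobs can be, here is a sketch of the disk space check. The function names and the default limit of 90 percent are assumptions for illustration; the check relies on the portable output format of `df -P`, where the fifth column is the used capacity.

```shell
#!/bin/sh
# Hypothetical helper: print the percentage of used space on the
# filesystem that holds path $1.
disk_usage_percent() {
  # -P forces one line per filesystem; column 5 looks like "42%".
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Fail (non-zero exit) when usage is at or above the limit $2 (default 90).
check_disk_space() {
  used=$(disk_usage_percent "$1")
  limit=${2:-90}
  if [ "$used" -ge "$limit" ]; then
    echo "Disk usage for $1 is ${used}% (limit ${limit}%)" >&2
    return 1
  fi
  echo "Disk usage for $1 is ${used}%"
}
```

Run as a Jenkins shell step, `check_disk_space /` would turn the build red once the disk fills past the limit.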